
Back to NeilChak's Neural page

This example is from the first homework assignment of the Short Course on Neural Networks by Dr Klinkachorn of the Computer Engineering Department at WVU. Please note that the context of the problem and the problem statement have been changed to reflect the interests of this robot club.

You can read about the history of how the perceptron was developed by Dr Rosenblatt in the 1950s.

You can read about the theory of operation of a perceptron neural network.

Now for our first example, a two-dimensional perceptron:

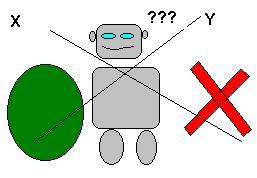

In this example the perceptron is asked to separate two groups of points on an X-Y coordinate axis. In the program's graphics and in the illustrations that follow, the points in one group are marked with an X and the points in the other group with an O.

Now it may help to think of the X,Y coordinate pairs as the output of a robot sensor. Imagine for a moment that we have a robot with a scanner that looks around the room it is in and returns X,Y numbers for reflected signals in the room.

What we want is for the robot to know whether a signal came from an X point or an O point. The sensor itself does not know; it just returns X,Y numbers on a coordinate axis with the robot at the center.

This is a 2-D problem since there are only two inputs: the X and the Y number from the sensor. So when we ask the perceptron network to tell which is which, it can do this by drawing a line. The line is implemented in its logic, and it must learn on its own where the line should go.
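In the usual textbook formulation of a perceptron (assumed here; the course program may differ in details), "drawing a line" means multiplying each input by a weight, adding a bias, and comparing the total with zero. The weights $w_1$, $w_2$ and the bias $b$ are the numbers the network learns, and the points where the total is exactly zero form its line:

```latex
\text{output}(x, y) =
\begin{cases}
  X & \text{if } w_1 x + w_2 y + b > 0 \\
  O & \text{otherwise}
\end{cases}
\qquad \text{decision line: } w_1 x + w_2 y + b = 0
```

Learning the line therefore means finding values of $w_1$, $w_2$ and $b$ that put every X point on the positive side and every O point on the other side.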

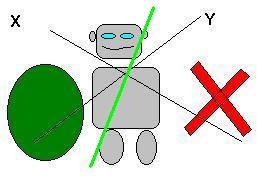

For you and me this problem is simple: we would just draw a line like so.

The green line represents our decision line. Any coordinate pair on the left side of it would be an O. Any coordinate pair on the right side would be an X.

Well, that's easy for us to do. But what about the perceptron network? How does it learn to draw a line like this?

Training is the Key

We, the people who put the network together, must provide training examples for the network to learn from. For the moment, let us assume the problem is more complex and we don't know the line. (Of course, here we do know, but as the problems get harder we want the network to figure out the answers to questions that we cannot answer.)

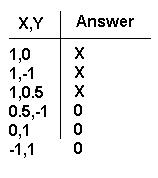

Even if we don't know how to draw the line, the perceptron network can figure it out. We just have to give it some examples first, like the ones pictured above.

The perceptron starts out not knowing how to separate the answers, so it guesses. For example, we input 1,0 into the network and it guesses O, but the right answer is X. So the perceptron adjusts its line, and we try the next example. Eventually the perceptron gets all the answers right, as long as the two groups can be separated by a straight line, as they can here.
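One common way to "adjust the line" is Rosenblatt's perceptron learning rule. The worked step below is a sketch under stated assumptions, not necessarily what the course program does: the weights $w_1$, $w_2$ and bias $b$ define the line $w_1 x + w_2 y + b = 0$, the network answers X when $w_1 x + w_2 y + b > 0$, the target $t$ and output $o$ are 1 for X and 0 for O, the learning rate is $\eta = 1$, and all weights start at zero (the real program may start from random values). Working through the 1,0 example from the text:

```latex
\begin{aligned}
&\text{Rule:}   && w_1 \leftarrow w_1 + \eta\,(t-o)\,x,\quad w_2 \leftarrow w_2 + \eta\,(t-o)\,y,\quad b \leftarrow b + \eta\,(t-o) \\
&\text{Guess:}  && w_1 \cdot 1 + w_2 \cdot 0 + b = 0 + 0 + 0 = 0 \not> 0 \;\Rightarrow\; o = 0 \ (\text{O}),\ \text{but } t = 1 \ (\text{X}) \\
&\text{Update:} && w_1 = 0 + 1 \cdot 1 \cdot 1 = 1,\quad w_2 = 0 + 1 \cdot 1 \cdot 0 = 0,\quad b = 0 + 1 \cdot 1 = 1 \\
&\text{Check:}  && 1 \cdot 1 + 0 \cdot 0 + 1 = 2 > 0 \;\Rightarrow\; \text{X (now correct)}
\end{aligned}
```

When a guess is right, $t - o = 0$ and nothing changes; when the network wrongly answers X for an O point, $t - o = -1$ and the line moves the other way.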

So what's so cool about this?

One cool thing about this is that once the network has learned the example set above, it will be able to give mostly right answers for coordinate pairs that were not in the example set, by "generalizing" the idea.
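The whole train-then-generalize cycle fits in a short program. This is a minimal Python sketch, not the p1.exe source: it assumes the standard perceptron learning rule, zero starting weights, a learning rate of 1, and a made-up training set (X points on the right, O points on the left, including the 1,0 example). The names `predict`, `train` and `TRAINING`, and the "unseen" test points, are hypothetical.

```python
# Minimal perceptron sketch (hypothetical data; not the original p1.exe code).
# Labels: 1 means X, 0 means O. Each input is an (x, y) sensor reading.

def predict(weights, bias, point):
    """Return 1 (X) if the point is on the positive side of the line, else 0 (O)."""
    x, y = point
    return 1 if weights[0] * x + weights[1] * y + bias > 0 else 0


def train(examples, rate=1.0, max_epochs=100):
    """Perceptron learning rule: nudge the line after every wrong guess.

    Stops after the first full pass with no mistakes. That is only
    guaranteed to happen if a straight line can separate the groups,
    so max_epochs caps the loop otherwise.
    """
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for point, target in examples:
            error = target - predict(weights, bias, point)  # -1, 0 or +1
            if error != 0:
                mistakes += 1
                weights[0] += rate * error * point[0]
                weights[1] += rate * error * point[1]
                bias += rate * error
        if mistakes == 0:
            break
    return weights, bias


# Hypothetical training set: X points on the right, O points on the left.
TRAINING = [
    ((1, 0), 1), ((2, 1), 1), ((1, -2), 1), ((3, 2), 1),
    ((-1, 0), 0), ((-2, 1), 0), ((-1, -2), 0), ((-3, -1), 0),
]

if __name__ == "__main__":
    weights, bias = train(TRAINING)
    # Generalization: points the network never saw during training.
    for point in [(4, -1), (0.5, 3), (-4, 2), (-2, -3)]:
        label = "X" if predict(weights, bias, point) == 1 else "O"
        print(point, "->", label)
```

With this made-up data the loop stops after its second pass, and the learned line is x = -1 rather than the "obvious" x = 0: any line that separates the training points counts as correct to a perceptron. That is why its answers on new points are mostly, not always, right; a point such as (-0.5, -3) would come out as X here.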

Another cool thing is that the trained perceptron network is really only one or two lines of code. Even though, as in this overdone example, we might have hundreds of lines of code to build and train the network, the resulting network is just a couple of lines: multiply each input by its learned weight, add them up, and check which side of the line the result falls on. So the completed robot only needs a couple of lines of code to use the neural network. Now if simple is good, then here we have one or two lines of code that can generalize ideas! Just think of where you can go from here with more complex stuff.
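Here is roughly what "one or two lines of code" can look like on the robot once training is over. The weight values `W1`, `W2` and `B` and the function name `classify` are hypothetical stand-ins for whatever numbers a training run actually produced; only the weighted-sum-and-compare form is the point.

```python
# Hypothetical frozen weights, standing in for the output of a training run.
W1, W2, B = 1.0, 0.0, 1.0


def classify(x, y):
    """The whole trained network: one weighted sum and one comparison."""
    return "X" if W1 * x + W2 * y + B > 0 else "O"


if __name__ == "__main__":
    print(classify(1, 0))    # X
    print(classify(-3, -1))  # O
```

No training code ships with the robot; only the three learned numbers and the comparison are needed.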

Yet another cool thing is that we don't have to use code at all to run the network. What, you say, how can this be? It's true, since you can build a neural network from transistors. The trick is to build a transistor circuit that acts like the neural net in the code simulation. This can be done, but it would require writing code to simulate the transistors as part of the perceptron implementation. This is not too hard, but it does take some work.

Download the example programs and code

To use the program, unzip it and run p1.exe, a DOS program. Press the space bar to see the next step, or press Escape to quit. The source code is included.

p1.zip (about 50 KB)


If I am using a picture or text that you believe only you should have rights to, then e-mail me and I will rectify the problem.